GNN学习笔记

GNN从入门到精通课程笔记

2.4 Struc2Vec (KDD ‘17)

- struc2vec: Learning Node Representations from Structural Identity

Key ideas

- Structural similarity does not depend on hop distance

- neighbor nodes can be different, far away nodes can be similar

- Structural identity as a hierarchical concept

- depth of similarity varies

- Flexible four step procedure

- The operational aspect of steps is flexible

Procedure

- Step 1: Structural Similarity

- 设计了一种衡量两个节点Structural Similarity的方法,这个方法只以来与节点(及其邻居)的度有关

- Hierarchical measure for structural similarity between two nodes

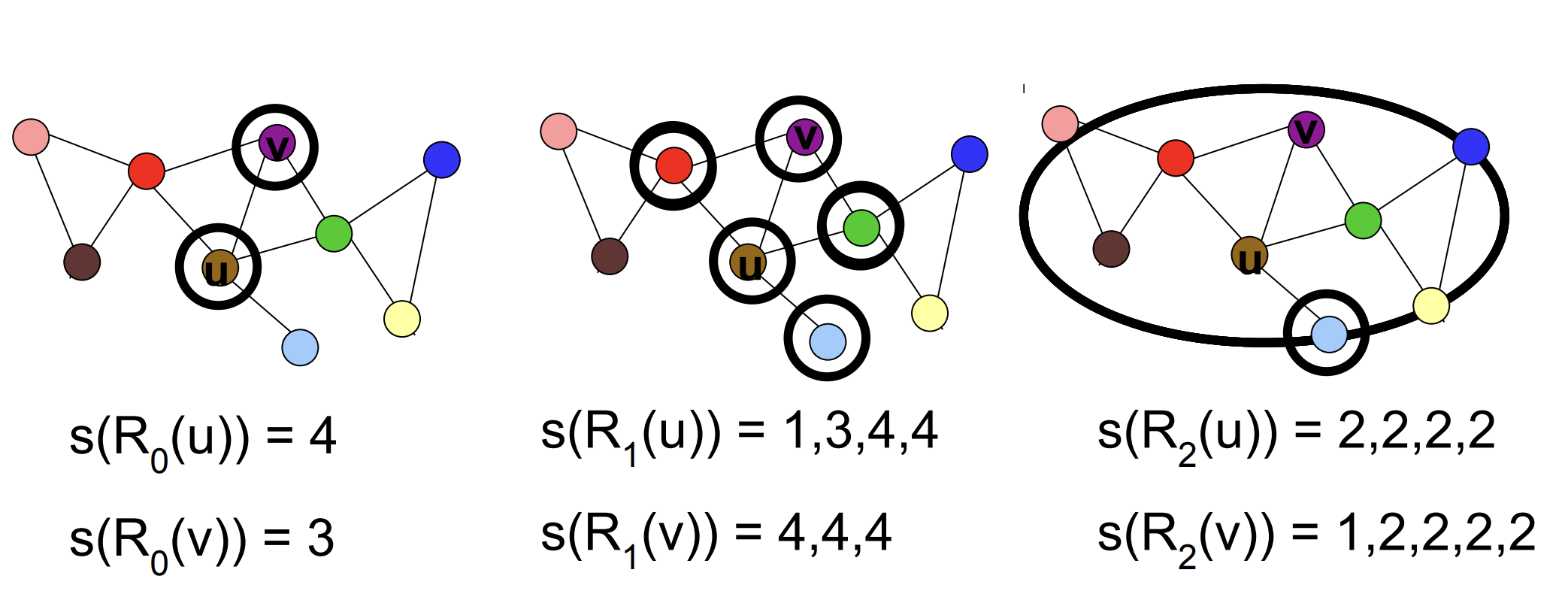

- R_k(u): set of nodes at distance k from u (ring)

- s(S): ordered degree sequence of set S

- g(D1 ,D2): distance between two ordered sequences

- cost of pairwise alignment: max(a,b) / min(a,b) -1

- optimal alignment by DTW

- f_k(u,v): structural distance between nodes u and v considering first k rings

- f_k(u,v) = f_{k-1}(u,v) + g(s(R_k(u)), s(R_k(v)))

- g(s(R_k(u)), s(R_k(v))): 在第k个Ring上,u和v的距离

- f_k(u,v): 在第0-k个Ring上,u和v的距离的和

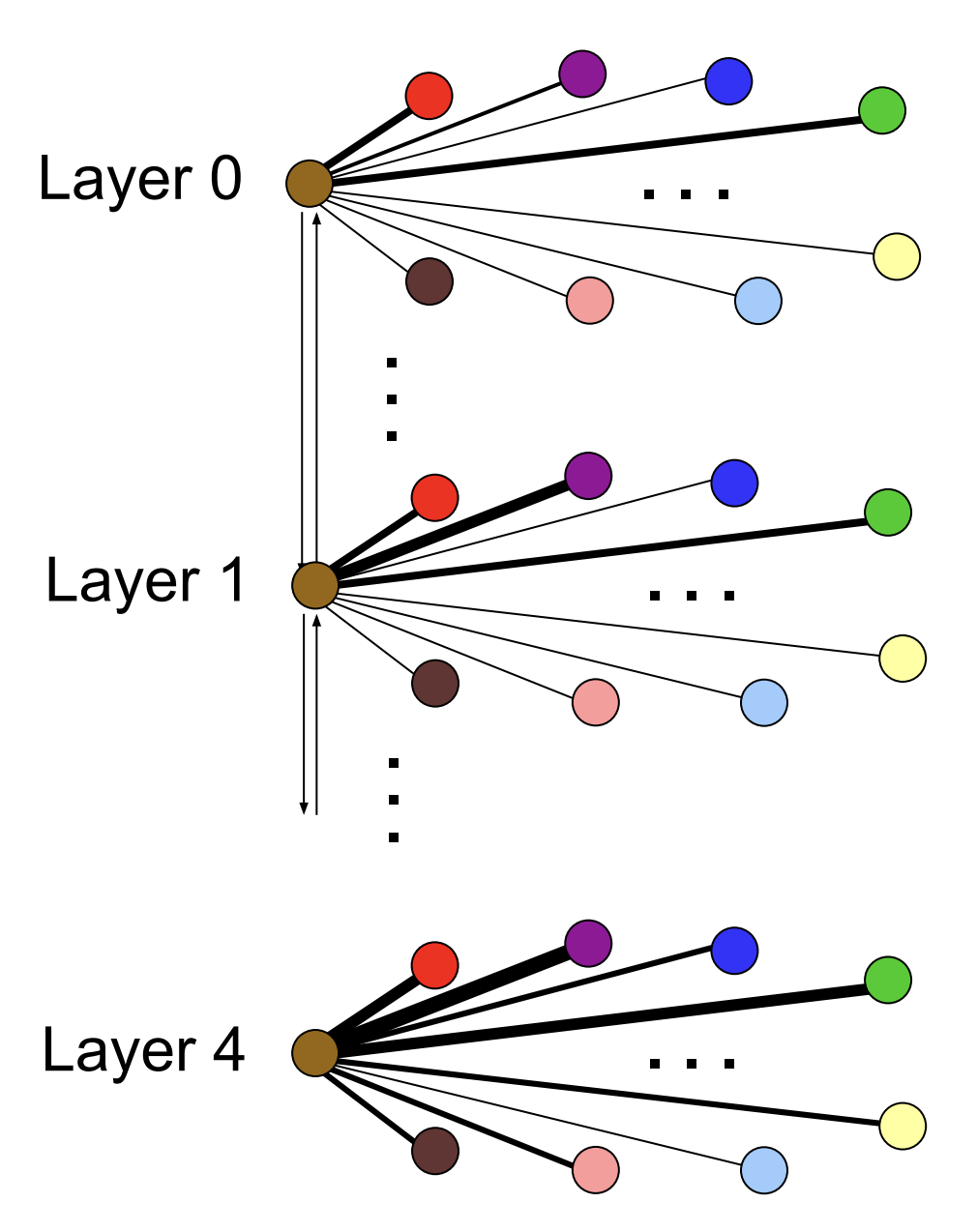

- Step 2: Multi-layer Graph

- Each layer is weighted complete graph

- corresponds to similarity hierarchies

- Edge weights in layer k

- w_k (u,v) = exp{-f_k(u,v)}

- 把在第k个Ring上u和v的距离映射到Layer k上

- Connect corresponding nodes in adjacent layers

- Each vertex is connected to its corresponding vertex in the layer above and below (layer permitting).

- The edge weight between layers

- w(u_k,u_{k+1}) = log(\Gamma_k(u) + e)

- \Gamma_k(u) = \Sigma 1{w_k(u,v)>\bar{w_k}}

- \Gamma_k(u): The similarity of node u to other nodes in layer k.

- If u has many similar nodes in the current layer, then it should change layers to obtain a more refined context.

- By moving up one layer, the number of similar nodes can only decrease.

- w(u_k,u_{k-1}) = 1

- w(u_k,u_{k+1}) = log(\Gamma_k(u) + e)

- Each layer is weighted complete graph

- Step 3: Generate Context

- Context generated by biased random walk

- walking on multi-layer graph

- Walk in current layer with probability p

- choose neighbor according to edge weight

- RW prefers more similar nodes

- Change layer with probability 1-p

- choose up/down according to edge weight

- RW prefer layer with less similar neighbors

- Context generated by biased random walk

- Step 4: Learn Representation

- For each node, generate set of independent and relative short random walks

- Train a neural network to learn latent representation for nodes

- Skip-gram

- Hierarchical Softmax

Conclusion:

- struc2vec helps classification based on roles